Resources

| Main Server | |
|---|---|
| Model | HPE ProLiant DL380 Gen9 |
| Processor | 2x Intel(R) Xeon(R) CPU E5-2630 v4 (10 cores, 2.20 GHz, 25 MB cache, 85 W) |
| Graphics Processing Unit | NVIDIA Tesla P100 (3584 CUDA cores) |
| Memory | 212 GB |
| Storage | 7.37 TB, 10,000 rpm SAS, RAID 5 configuration |
| Operating System | Ubuntu Server 16.04 |
| Frameworks | |

| Backup Server | |
|---|---|
| Model | HPE ProLiant ML350 Gen9 |
| Processor | Intel(R) Xeon(R) CPU E5-2620 v4 (8 cores, 2.10 GHz, 20 MB cache, 85 W) |
| Graphics Processing Unit | NVIDIA Tesla P4 (2560 CUDA cores) |
| Memory | 157 GB |
| Storage | 25.57 TB, 7,200 rpm SAS |
| Operating System | Ubuntu Server 16.04 |

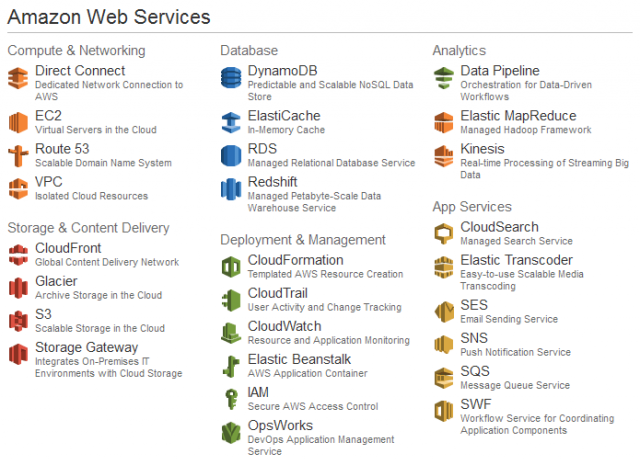

| Cloud Computing |
|---|

| GPUs |
|---|
| NVIDIA Tesla P40 (to be installed in the main server) |

HPC Usage Code of Conduct

- Users are required to fill out the server usage request form at the following link:
  🔗 https://s.id/request-server-AIRDC

- Each submission is valid for one specific job only (e.g., model training or dataset inference), not for an entire project.

- Scheduling is carried out on business days by the AIRDC team, considering factors such as queue length, project category, and job size. The current queue status can be viewed here:
  🔗 https://s.id/server-queue-AIRDC

- Users will receive a confirmation email containing SSH access instructions one business day after scheduling is completed.

- The maximum usage duration for each job is 2 days (48 hours).

- If AIRDC receives no response from the user within 10 business days after the usage period ends, AIRDC will send a follow-up confirmation email. If the job is still ongoing, the user must resubmit the form within 1 day to rejoin the queue. If no confirmation is received, all of the user's data will be deleted from the server.
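The timing rules above can be worked through with a small date calculation. The following Python sketch is illustrative only and is not an AIRDC tool: it assumes business days are Monday to Friday with no public holidays, that the 48-hour limit starts when SSH access begins, and that the follow-up date is counted from the day the usage period ends. The names `add_business_days` and `usage_timeline` are hypothetical.

```python
from datetime import date, datetime, timedelta

MAX_USAGE = timedelta(hours=48)  # maximum usage per job (2 days)
FOLLOW_UP_BUSINESS_DAYS = 10     # business days after usage ends before a follow-up email


def add_business_days(start: date, n: int) -> date:
    """Return the date n business days after start.

    Assumption: business days are Monday to Friday; public holidays are ignored.
    """
    current = start
    remaining = n
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0 = Monday ... 4 = Friday
            remaining -= 1
    return current


def usage_timeline(scheduled: date, access_start: datetime) -> dict:
    """Hypothetical helper: key dates for one job under the rules above."""
    usage_end = access_start + MAX_USAGE
    return {
        # Confirmation email with SSH instructions: one business day after scheduling.
        "access_email": add_business_days(scheduled, 1),
        # End of the 48-hour usage window.
        "usage_end": usage_end,
        # Follow-up email if there is no response: 10 business days after usage ends.
        "follow_up": add_business_days(usage_end.date(), FOLLOW_UP_BUSINESS_DAYS),
    }


if __name__ == "__main__":
    # Example: job scheduled on Friday 7 June 2024, access starts Monday 10 June at 09:00.
    timeline = usage_timeline(date(2024, 6, 7), datetime(2024, 6, 10, 9, 0))
    for key, value in timeline.items():
        print(f"{key}: {value}")
```

For a job scheduled on Friday 7 June 2024 with access starting on Monday 10 June at 09:00, this gives an access email on 10 June, a usage end of 12 June at 09:00, and a follow-up date of 26 June. Holidays could be handled by also skipping dates found in a user-supplied holiday set.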

Server Specifications:

- 2 × Intel® Xeon® CPU E5-2630 v4 @ 2.20GHz
- 1 × NVIDIA Tesla P100, CUDA 10.1
- 212 GB RAM

Additional Notes:

- Server access is available only through a command-line interface (CLI) over SSH. A graphical user interface (GUI) is not supported.

- Users are required to test their code beforehand on a local machine or Google Colab.

- The server environment is Docker-based (containerized), isolated, and customizable by the user. The maximum storage allocation per user is 150 GB.
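Because each user is limited to 150 GB, it can help to check how much space a working directory uses before copying in more data. The following Python sketch uses only the standard library and is an illustration, not an AIRDC-provided tool. It assumes the quota is measured in decimal gigabytes (10^9 bytes) and that the directory you pass in is the one that counts toward your allocation; `check_quota.py`, `directory_size`, and `quota_report` are hypothetical names. It sums apparent file sizes rather than allocated disk blocks, so the result can differ slightly from what `du` reports.

```python
import os
import sys

QUOTA_BYTES = 150 * 10**9  # assumption: 150 GB quota in decimal gigabytes


def directory_size(path: str) -> int:
    """Sum the apparent sizes of all regular files under path.

    Symbolic links are skipped: os.walk does not descend into linked
    directories by default, and linked files are excluded explicitly.
    """
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            try:
                if not os.path.islink(full):
                    total += os.path.getsize(full)
            except OSError:
                pass  # file vanished or is unreadable; skip it
    return total


def quota_report(path: str, quota: int = QUOTA_BYTES) -> str:
    """Return a one-line summary of usage under path relative to the quota."""
    used = directory_size(path)
    pct = 100 * used / quota
    return f"{path}: {used / 10**9:.2f} GB used of {quota / 10**9:.0f} GB ({pct:.1f}%)"


if __name__ == "__main__":
    if len(sys.argv) > 1:
        print(quota_report(sys.argv[1]))
    else:
        print("usage: python check_quota.py <directory>")
```

Running `python check_quota.py ~/data` inside the container would print a single usage line for that directory.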

Acknowledgement:

It is mandatory to include the attribution “NVIDIA – BINUS AI R&D Center” in every scientific publication (journal and conference) resulting from the use of this server.

Example:

Contact:

For further inquiries, please contact:

- Kuncahyo Setyo Nugroho – kuncahyo.nugroho@binus.edu
- Mahmud Isnan – mahmud.isnan@binus.edu